I want you to imagine you just bought a second-hand car. Let’s say, a ‘91 Toyota Corolla. It drives fine, but when you check the internals … it’s a mess. Some madman has totally rewired it based on no plan known to god nor mechanic: there’s solder everywhere; there’s blowtorch burns so extensive you can smell them on a hot day; there’s a bunch of random LEDs that don’t seem to do anything, but if you take any of them out the car won’t start. You’re convinced that this whole thing is going to explode if you take it above 55km/h.

You spend weeks rewiring it. You can’t get it to look anything like a factory model, but when you’re done you’re at least convinced you can use the cupholder without cutting your hand off. Damn, you’re good—it was a pain in the ass and you had to disassemble a string of Christmas lights for some extra LEDs, but you’re proud of your work.

If the car were a piece of software, the correct way to describe your repairs would be hacking a hack. And if somebody else managed to gain remote control of your car using an issue in the old wiring that you missed (who installs a mini-microwave under the engine? Why does the microwave have wifi?) and proceeded to plow it into a nanna crossing the road at 10am, then they’ve hacked your hacked hack.

Do you see why this is a problem?

Here’s an incomplete list of things I have heard developers call ‘a hack’:

- Intentional unauthorised access

- Unintentional unauthorised access

- Any malicious code inserted into any device

- Any exploit whatsoever

- Good, clever, well-made code (written by you)

- Terrible, no-good, jerry-rigged code (written by somebody else)

- Any code whatsoever (written by anybody at all)

If it helps, consider lifehacks. We’ve all seen them: stuff like putting a rubber band around a paint can so you can wipe your brush on it. They’re probably the closest the general public gets to the tech definition of ‘hacking’: No. 8 wire solutions that look a bit janky but can do as good a job as (or a better job than) using the tool in the intended fashion. And, like software hacks, a lot of them are profoundly worthless and will make your microwave explode.

An intentional hack that results in unauthorised access is, well, hacking the code: using the tools available to improvise a way into a secure area. Some of the earliest hackers were folks in the 1950s and ’60s who figured out you could ‘hack’ phone lines by playing the right sounds at them to make free telephone calls. Often it involved using a high-tech “blue box” but sometimes all it took was a 5c tin whistle tuned to the right note.

I briefly mentioned ‘cracking’ in the original budget piece, and that’s a more common (if a little dated) term among developers and InfoSec folks to refer to intentional malicious penetration of a system. Some hacks are cracks, but not all cracks are hacks. Using a specific tool designed to penetrate a secure system is cracking, and probably best fits the public understanding of a ‘hack’. It isn’t a hack, though—it’s using the tool for its intended purpose.

OR Hacking and Cracking are the same and both only refer to unauthorised access, but one is good and one is bad OR it’s actually Hacking and Cracking and Packing, which is about politics and gerrymandering and not about tech, unless you decide it isn’t and start a fight about it in the Burger King parking lot OR it’s a sort of random gapfiller that helps to give shape to vague tech ideas that don’t have a name. The public definition has bled into the professional one and now it’s hard to tell what anybody is talking about. It’s a rubber band of a word: a wibbly, stretchy, useful fix in your day-to-day, but not great as a permanent solution. The word ‘hack’ is, ironically, a bit of a hack.

It gets even more complicated when, as it did with the 2019 NZ budget breach, the word crashes into the public sphere. While developers use the term too broadly, the discourse uses it far too narrowly. We’ve seen this over the last few weeks: people arguing over whether it means Intentional Unauthorised Access Against A Perfectly Secure System or whether we’re allowed to broaden it as far as Intentional Unauthorised Access Facilitated By Poor Information Security Practices, and meanwhile developers are in the corner shouting “Shit, this whole network layer is just a bunch of hacks. I hacked their hacks and now I’ve just gotta hope we don’t get hacked.”

Although just for the record, if you’re in that former camp re what hacking is, how good does security have to be before something counts as a hack? Because no encryption is perfect. One of the better forms of encryption (often used by governments and the intelligence community) is called PGP, or “Pretty Good Privacy”. The name is partly a joke, but it’s also a tacit admission of what everybody in this business knows: everything has got a back door somewhere, even PGP. Quinn Norton’s wonderful essay Everything Is Broken is absolutely required reading here; there’s back doors everywhere. Even if the code is perfect (which it ain’t; even the best developers in the world have bad days), that back door is a curious intern who picks up a USB in the carpark, or a security guy new enough to not know all the faces, or an IT guy with a porn addiction.

Perfect protection is impossible, and “good enough protection” by the standards of politicians and pundits is a goalpost that moves depending on who’s doing the kicking.

Which is a big part of the reason all this debate around hacking has made the actual InfoSec community so annoyed: people who don’t know what they’re talking about are using words they don’t understand to score political points. It’s also not surprising when they do it: Makhlouf and Robertson weren’t wrong when they called it a hack, nor were National wrong for saying it wasn’t. I’m not one of those “both sides have a point” guys (God forbid), but both sides in this case are right, and they’re right because the discourse sucks. They weren’t lying when they used it that way, because that’s how it’s used: to mean a different thing depending on what you need from it. They’re right because they’re haggling over the definition of an incredibly vague term that not even the people using it professionally can agree on.

It falls to us as people who actually pay attention to these things to elevate the discourse, or we’re again going to have to deal with the spectacle of the most powerful people in the country flicking rubber bands at each other and claiming they’re bullets.

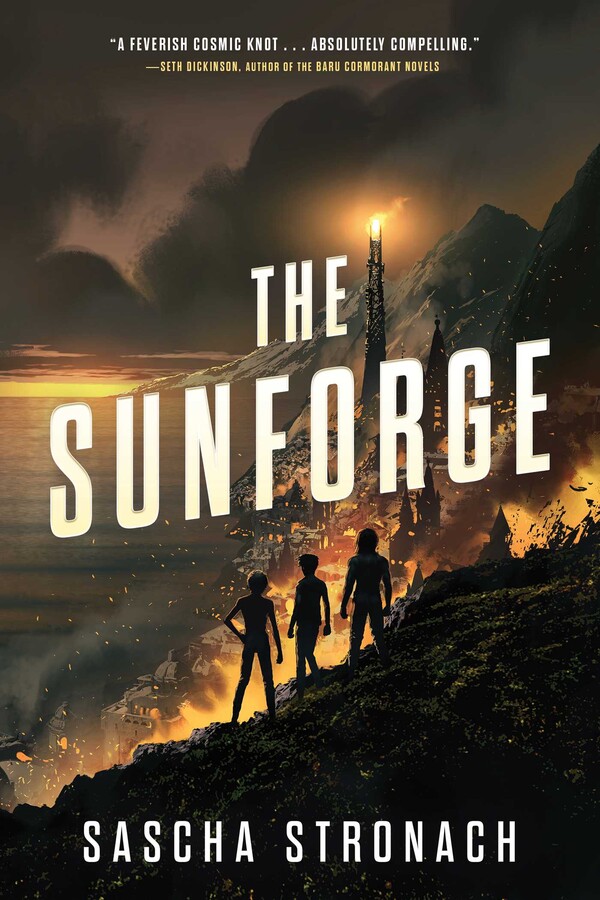

Alexander Stronach is an author and editor from Wellington, New Zealand. You can find him raising hell on Twitter @understatesmen, or on the roadside shouting at passing cars.